Introduction

In the history of software products, the hardest part has always been writing the code. Methodologies like Scrum, disciplines like DevOps, and tools like Jira were largely designed to make writing code faster, safer, and cheaper.

In a sense, AI hasn’t caused a revolutionary shift in product development; the changes it seems to have brought about were under way well before LLMs were commercially available. What AI did was make painfully obvious the changes of the last ten years or so, simply by accelerating them.

The biggest constraint in product development has moved from how to build to figuring out what to build. This shift helps explain why a 2025 study of 10,000 developers (Faros AI) found that AI tools improved individual task speed while organizational metrics did not change, and why a separate randomized trial (METR) found that experienced open-source developers working in their own mature codebases were 19% slower with AI assistance than without. The benefits of AI are real, but they aren’t realized automatically, especially if we leave unchanged how we organize, how we operate, and our habit of treating the knowledge and experience we’ve acquired as dogma.

Trouble Has Been Brewing

Sprints

When implementation is no longer the bottleneck, sprint capacity is used up faster: teams finish the planned work early and spend the rest of the sprint on lower priorities. In this situation the sprint itself is not the problem; the lack of discovery work and outcome accountability is. AI makes that deficiency impossible to hide behind rituals, or behind process inefficiency blamed on the Agile Manifesto. As Sonya Siderova put it, “Agile isn’t dead. It’s optimizing a constraint that moved.” Agile’s principles (short cycles, customer feedback, responding to change) matter more than ever. It’s the ceremonies that are worth even less now.

Discovery

Jason Yip’s CD3 framework (Continuous Discovery, Continuous Design, Continuous Delivery) rings truer now that the bottleneck has moved, and it emphasizes the cost of getting discovery wrong. If a team can ship five features in the time it used to take to ship two, it can also waste two and a half times as much effort on the wrong problem in the same period. Discovery, not shipping, now carries the primary load.

Governance

Over the last ten years, DevOps built automated safety into deployment. Unfortunately, we don’t have the same safety net in discovery and design, because we assumed human judgment would cover it. AI code generation breaks that assumption: code can now be produced faster than people can apply judgment to it. Automated review of AI output is no longer optional. It’s the necessary minimum overhead, not only for process efficiency but also because regulation is moving toward requiring it. The EU AI Act entered into force in August 2024, with obligations for general-purpose AI models applying from August 2025, and NIST published its AI Risk Management Framework in 2023. Governance needs to be built into the modern product development process by default, end to end.

The Fluid Lifecycle

What follows is a proposal that builds on the work of several institutions and individuals whose terminology I borrow here. The goal is to reshape how we approach product development without abandoning the tried-and-true lessons we already know.

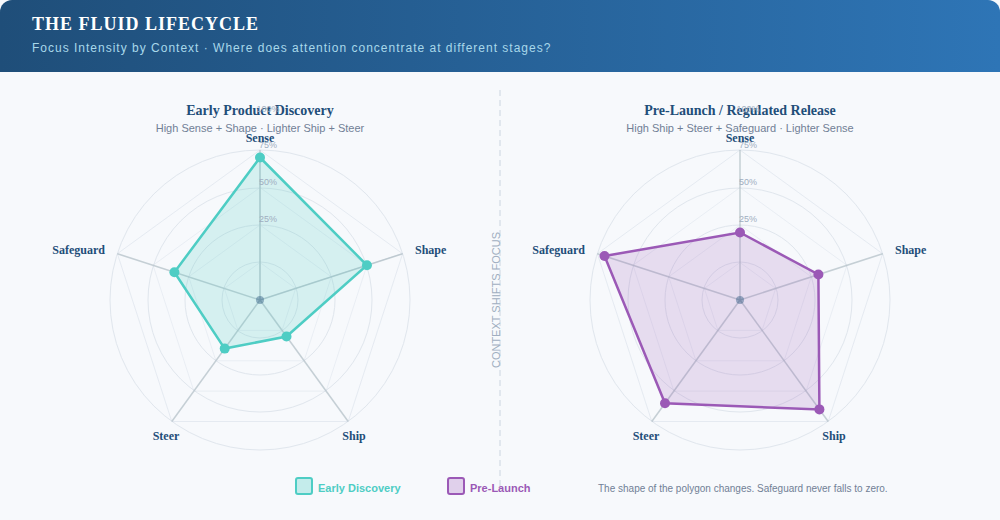

The Fluid Lifecycle replaces sequential phases with five flows that run concurrently: Sense, Shape, Ship, Steer, and Safeguard. In practice, a team’s attention shifts between flows based on what the work demands, not what the calendar says.

Sense

Continuous practice of understanding which problems are worth solving

Instead of a discovery sprint or project that gates delivery, Sense is a standing commitment to regular customer contact, signal review, and opportunity evaluation that runs continuously. AI speeds up the synthesis side: clustering feedback, querying analytics, gathering and summarizing research. What it cannot speed up is judging which signals matter, or the human understanding of why users behave the way they do. Keeping the Sense flow strong at all times eases the pressure on discovery as the new primary bottleneck.

Shape

Continuous co-creation of solutions

AI reduces the cost of generating options. A team can explore multiple approaches in the time it previously took to produce one. The risk is confusing option generation with decision quality. Fast iteration without clear success criteria produces a lot of output and very little problem solving. Shape works when there is a clean problem statement coming from Sense and clear outcome criteria coming from Steer. Jason Yip’s point applies directly here: build a solution toolbox independently of specific problems. Know what is possible technically and behaviorally so the team has ready approaches when problems surface, rather than starting from scratch each time.

Ship

Build, Test, Deploy

AI has perhaps changed this flow more visibly than any other, and it has attracted the most (over)confidence. The catch is that AI saves time on well-specified, bounded problems but can cost time when the work involves understanding existing code, debugging subtle failures, or making long-term architectural decisions. And while AI will inevitably improve at even these harder problems, human judgment is still required before anything goes to production. The Ship flow therefore requires small pull requests for AI-generated changes, automated security scanning, well-maintained test coverage, and thorough documentation treated as a primary artifact, not a nice-to-have. Teams with a weak Ship flow will end up with codebases that demo well and fail in production.
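The small-pull-request requirement is one of the easier parts of the Ship flow to automate. The Python below is a minimal sketch under stated assumptions, not a prescribed tool: it reads the text printed by `git diff --numstat` (one line per file: added lines, deleted lines, and path, tab-separated, with `-` for binary files) and flags changes too large for careful human review. The 400-line limit and the names `changed_lines` and `is_reviewable` are hypothetical.

```python
# Hypothetical review-size gate for AI-generated changes.
# Input: text printed by `git diff --numstat <base>...HEAD`,
# one line per file as "added<TAB>deleted<TAB>path"; binary files show "-".

MAX_CHANGED_LINES = 400  # assumed threshold; each team would tune its own


def changed_lines(numstat: str) -> int:
    """Sum added + deleted lines across files, skipping binary files."""
    total = 0
    for line in numstat.splitlines():
        if not line.strip():
            continue  # ignore blank lines
        added, deleted, _path = line.split("\t", 2)
        if added == "-" or deleted == "-":
            continue  # binary file: git reports no line counts
        total += int(added) + int(deleted)
    return total


def is_reviewable(numstat: str, limit: int = MAX_CHANGED_LINES) -> bool:
    """True if the change is small enough for a careful human review."""
    return changed_lines(numstat) <= limit


if __name__ == "__main__":
    sample = "120\t30\tsrc/app.py\n-\t-\tassets/logo.png\n10\t0\ttests/test_app.py\n"
    print(changed_lines(sample), is_reviewable(sample))  # prints "160 True"
```

In CI, the input could come from something like `git diff --numstat origin/main...HEAD`. Counting added plus deleted lines is a deliberately crude proxy for review effort; the point is that "small" becomes an explicit, team-owned number rather than a hope.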

Steer

Continuous check on whether what shipped solved the problem

Steer addresses the shift from verification to validation. We no longer care only whether it does what we specified; we care whether it did what we needed. Many teams measure outputs: features shipped, velocity, sprint completion. Steer measures outcomes: did user behavior change in the way the team wrote down, in advance, as “what success looks like”? The Steer flow keeps the team re-centered on that highest-level objective at all times.

Safeguard

Security, ethical review, bias detection, regulatory compliance, and trust

Safeguard runs inside all four of the other flows rather than as a review at the end of a project. Automated security scanning of AI-generated code in CI is the floor. Periodic audits are insufficient when code, and the vulnerabilities that come with it, arrives this quickly. For products with AI-powered features, the EU AI Act imposes specific obligations around auditability, transparency, and human oversight, depending on how the system is classified by risk. Safeguard is not a compliance function outside the team. It is a default capability of the team itself: asking, every day and on every initiative, “what could go wrong, for whom, how would we know, and what would we do?”

Values

Taking the Agile Manifesto as the reference most people use today, the values of the Fluid Lifecycle extend what already exists:

| We value | Over |
|---|---|
| Validated outcomes | Delivered features |
| Intent and judgment | Implementation velocity |
| Human-AI collaboration with clear accountability | Full delegation or full manual execution |
| Governance built into the work | Compliance reviewed at phase end |
| Adaptive flow by context | Fixed ceremonies on a fixed calendar |
| Documented solution options | Early commitment to one approach |
| Transparency about what AI did vs. what humans decided | Implicit trust in AI output |

12 Principles

- Ship outcomes, not features. Define success before you build, not after.

- Explore alternatives cheaply. Option generation is nearly free; use it.

- Deliver at the frequency the problem demands, not the frequency the calendar allows.

- Judgment is the necessary bottleneck. Protect it. Do not automate it away.

- Supervise AI in proportion to the stakes. AI is a capable but extremely fallible collaborator.

- Small teams with clear intent outperform large teams with established process.

- Write for disposability. Intent outlasts implementation, so document intent.

- Security scanning is table-stakes infrastructure for AI output.

- Governance is a flow, not a gate. Embed it or it does not happen.

- Ask “did it solve the problem” before asking “did it pass the tests.”

- Know what is newly possible before a problem arrives. Keep the toolbox stocked.

- Match the scope of delegation to demonstrated reliability. Review the human-AI split regularly.

What the Fluid Lifecycle Does Not Solve

Complexity Bias

When building is cheap, teams build more: more features, more options, more configuration. Complexity has always been the enemy of good products, and AI does not make it easier to resist; it makes it harder. No framework solves discipline problems.

Junior Development Pipeline

If juniors develop judgment by doing implementation work, and AI does more and more of the implementation, how will the next generation develop that judgment? There is no settled answer. Early evidence suggests that juniors who engage with AI as a learning tool rather than a shortcut develop faster. Whether that holds at scale is not yet known.

Outcome Measurement Lag

Steer assumes you can observe outcomes in a reasonable timeframe. Many product decisions take months to show their consequences. Shipping faster creates pressure to evaluate earlier than the data supports. Be explicit about time horizons when defining success. Resist substituting usage metrics for outcome metrics.
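Steer and the time-horizon caution can be combined into one habit: write the success criterion down before building, including the date after which the data can support a verdict. The Python below is a minimal sketch assuming a single metric where higher is better; `SuccessCriterion`, `steer_verdict`, and the example values are hypothetical illustrations, not part of any tool or of the framework itself.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class SuccessCriterion:
    """An outcome written down before building (hypothetical structure)."""
    metric: str        # the user behavior to observe
    baseline: float    # value measured before the change shipped
    target: float      # value that counts as "solved the problem"
    evaluate_on: date  # earliest date the data can support a verdict


def steer_verdict(c: SuccessCriterion, observed: float, today: date) -> Optional[bool]:
    """Return None before the time horizon, else whether the target was met.

    Assumes higher values of the metric are better.
    """
    if today < c.evaluate_on:
        return None  # too early: do not judge before the data supports it
    return observed >= c.target


if __name__ == "__main__":
    # Hypothetical example values, for illustration only.
    onboarding = SuccessCriterion(
        metric="share of new users completing setup in week one",
        baseline=0.42,
        target=0.55,
        evaluate_on=date(2026, 6, 1),
    )
    print(steer_verdict(onboarding, observed=0.58, today=date(2026, 4, 1)))  # None
    print(steer_verdict(onboarding, observed=0.58, today=date(2026, 6, 1)))  # True
```

Returning `None` rather than `False` before the horizon is the design choice that matters: "not yet knowable" stays distinct from "did not work", which is exactly the pressure that faster shipping tends to blur.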

Moving Forward

The Fluid Lifecycle is first of all a diagnostic. Map your current team against the five flows, ask which one is being neglected, and the answer is usually clear and actionable. For most teams it is Sense; for AI-augmented teams, Safeguard is the second gap.

The framework’s claim is narrow and specific: AI has moved the bottleneck, and frameworks optimized for a time when building was hard are now optimizing the wrong thing. The Fluid Lifecycle is organized instead around deciding what to build, validating that it worked, and governing the process honestly.

The current discourse is lively because we all sense that what used to work no longer does, and that what does work has precedent, just hidden or wearing a different coat of paint. I hope this framework helps move the discussion about Agile and AI forward.

-Sang

Sources

Steve Jones, ‘AI Killed the Agile Manifesto’, Medium, covered by InfoQ, February 2026

Jason Yip, ‘Continuous Discovery, Continuous Design, Continuous Delivery (CD3)’, Medium, February 2026

Casey West, ‘The Agentic Manifesto’, caseywest.com, 2025

Kent Beck, ‘Augmented Coding: Beyond the Vibes’, Substack, 2025

METR, ‘Measuring the Impact of Early-2025 AI on Experienced Open-Source Developer Productivity’, July 2025

Faros AI, ‘The AI Productivity Paradox Research Report’, 2025

Forrester, ‘State of Agile Development 2025’

Teresa Torres, ‘Continuous Discovery Habits’, 2021

AWS Prescriptive Guidance, ‘Strategy for Operationalizing Agentic AI’, 2026

EU AI Act (Regulation (EU) 2024/1689), in force August 2024, obligations phased in from 2025

NIST AI Risk Management Framework (AI RMF 1.0), 2023

Deloitte, ‘Tech Trends 2026: The Great Rebuild’